https://neptune.ai/blog/data-augmentation-in-python

Data Augmentation in Python: Everything You Need to Know

Explore data augmentation in Python: its core, image augmentation for DL, library speed comparisons, and more.

neptune.ai

In machine learning (ML), the situation when the model does not generalize well from the training data to unseen data is called overfitting. As you might know, it is one of the trickiest obstacles in applied machine learning.

The first step in tackling this problem is to actually know that your model is overfitting. That is where proper cross-validation comes in.

After identifying the problem you can prevent it from happening by applying regularization or training with more data. Still, sometimes you might not have additional data to add to your initial dataset. Acquiring and labeling additional data points may also be the wrong path. Of course, in many cases, it will deliver better results, but in terms of work, it is often time-consuming and expensive.

That is where data augmentation (DA) comes in.

What is data augmentation?

Data augmentation is a technique that can be used to artificially expand the size of a training set by creating modified data from the existing one. It is a good practice to use DA if you want to prevent overfitting, or the initial dataset is too small to train on, or even if you want to squeeze better performance from your model.
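To make the idea of "expanding the training set" concrete, here is a minimal, dependency-free sketch. Images are represented as nested lists, and the helper names (`flip_horizontal`, `flip_vertical`, `expand_dataset`) are illustrative assumptions rather than functions from any library. The sketch also assumes the transformations are label-preserving, which is not true for every task.

```python
def flip_horizontal(image):
    """Mirror every row left to right."""
    return [row[::-1] for row in image]

def flip_vertical(image):
    """Reverse the order of the rows."""
    return image[::-1]

def expand_dataset(images, labels, transforms):
    """Return the originals plus one transformed copy per transform.

    Labels are reused as-is because the transforms are assumed
    to be label-preserving.
    """
    new_images, new_labels = list(images), list(labels)
    for image, label in zip(images, labels):
        for transform in transforms:
            new_images.append(transform(image))
            new_labels.append(label)
    return new_images, new_labels

# Two tiny 2x2 "images" with made-up labels
images = [[[0, 1], [2, 3]], [[4, 5], [6, 7]]]
labels = ["cat", "dog"]

aug_images, aug_labels = expand_dataset(
    images, labels, [flip_horizontal, flip_vertical]
)
print(len(images), "->", len(aug_images))  # 2 -> 6
```

With two transformations, every original sample contributes two extra samples, so the dataset triples in size without any new labeling work.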

To be clear, data augmentation is not only used to prevent overfitting. In general, a large dataset is crucial for the performance of both ML and Deep Learning (DL) models, and when collecting more data is not an option, augmenting the data we already have can still improve the model. In other words, data augmentation is also a way to enhance the model’s performance.

In general, DA is frequently used when building a DL model. That is why throughout this article, we will mostly talk about performing data augmentation with various DL frameworks. Still, you should keep in mind that you can augment the data for the ML problems as well.

You can augment:

- Audio

- Text

- Images

- Any other types of data

We will focus on image augmentations as those are the most popular ones. Nevertheless, augmenting other types of data can be just as efficient and easy. That is why it’s good to remember some common techniques that can be used to augment the data.

Data augmentation techniques

We can apply various changes to the initial data. For example, for images, we can use:

- Geometric transformations – you can randomly flip, crop, rotate or translate images, and that is just the tip of the iceberg

- Color space transformations – change RGB color channels, intensify any color

- Kernel filters – sharpen or blur an image

- Random Erasing – delete a part of the initial image

- Mixing images – basically, mix images with one another. Might be counterintuitive, but it works
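A few of these techniques can be sketched without any library at all. The snippet below treats a grayscale image as a nested list of pixel values; the function names (`flip_horizontal`, `random_erase`, `mix_images`) are made up for illustration, and a real project would rather use NumPy arrays together with one of the libraries discussed later.

```python
import random

def flip_horizontal(image):
    """Geometric transformation: mirror every row left to right."""
    return [row[::-1] for row in image]

def random_erase(image, size, rng, fill=0):
    """Random Erasing: overwrite a random size x size square with `fill`."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - size)
    left = rng.randint(0, width - size)
    erased = [row[:] for row in image]  # copy so the input stays untouched
    for r in range(top, top + size):
        for c in range(left, left + size):
            erased[r][c] = fill
    return erased

def mix_images(image_a, image_b, alpha):
    """Mixing images: pixel-wise blend, alpha * a + (1 - alpha) * b."""
    return [
        [alpha * a + (1 - alpha) * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]

rng = random.Random(0)  # fixed seed so the run is repeatable
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
other = [[9, 9, 9], [9, 9, 9], [9, 9, 9]]

flipped = flip_horizontal(image)
erased = random_erase(image, size=2, rng=rng)
mixed = mix_images(image, other, alpha=0.5)
```

Note that when two images are mixed, their labels usually have to be mixed with the same weight; the sketch leaves labels out to stay short.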

For text there are:

- Word/sentence shuffling

- Word replacement – replace words with synonyms

- Syntax-tree manipulation – paraphrase the sentence to be grammatically correct using the same words

- Others are described in the article about data augmentation in NLP
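As a rough illustration of the first two text techniques, consider the sketch below. The synonym table is a tiny hand-written assumption; a real pipeline would likely draw synonyms from a thesaurus or an NLP library. Keep in mind that shuffling words can change the meaning of a sentence, so it is not suitable for every task.

```python
import random

# Hypothetical, hand-written synonym table used only for this example
SYNONYMS = {
    "quick": ["fast", "speedy"],
    "happy": ["glad", "cheerful"],
}

def shuffle_words(sentence, rng):
    """Word shuffling: return the same words in a random order."""
    words = sentence.split()
    rng.shuffle(words)
    return " ".join(words)

def replace_with_synonyms(sentence, rng, synonyms=SYNONYMS):
    """Word replacement: swap every known word for a random synonym."""
    words = []
    for word in sentence.split():
        options = synonyms.get(word.lower())
        words.append(rng.choice(options) if options else word)
    return " ".join(words)

rng = random.Random(42)
sentence = "the quick dog looks happy"

shuffled = shuffle_words(sentence, rng)
replaced = replace_with_synonyms(sentence, rng)
print(shuffled)
print(replaced)
```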

For audio augmentation, you can use:

- Noise injection

- Shifting

- Changing the speed of the tape

- And many more
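The three audio techniques above can be sketched on a plain list of samples. This is only an illustration under simple assumptions: the "clip" is a synthetic sine wave, the shift wraps around, and the speed change uses naive nearest-neighbor resampling (which also changes the pitch). Dedicated audio libraries offer better implementations.

```python
import math
import random

def inject_noise(samples, noise_level, rng):
    """Noise injection: add small Gaussian noise to every sample."""
    return [s + rng.gauss(0.0, noise_level) for s in samples]

def shift(samples, offset):
    """Shifting: roll the signal to the right by `offset` samples."""
    offset %= len(samples)
    if offset == 0:
        return samples[:]
    return samples[-offset:] + samples[:-offset]

def change_speed(samples, factor):
    """Speed change: factor > 1 makes the clip shorter (faster)."""
    new_length = int(len(samples) / factor)
    last = len(samples) - 1
    return [samples[min(int(i * factor), last)] for i in range(new_length)]

rng = random.Random(0)
# 100 samples of a sine wave standing in for a real audio clip
signal = [math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]

noisy = inject_noise(signal, noise_level=0.01, rng=rng)
shifted = shift(signal, offset=10)
faster = change_speed(signal, factor=2.0)
```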

Moreover, the greatest advantage of the augmentation techniques is that you may use all of them at once. Thus, you may get plenty of unique samples of data from the initial one.
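Here is a small sketch of how quickly combinations add up. It applies every subset of three simple operations to one tiny image and counts the distinct results. The helper names are illustrative assumptions, and the image is a nested list to keep the example free of dependencies.

```python
from itertools import combinations

def flip_horizontal(image):
    return [row[::-1] for row in image]

def flip_vertical(image):
    return image[::-1]

def transpose(image):
    return [list(column) for column in zip(*image)]

OPERATIONS = [flip_horizontal, flip_vertical, transpose]

def all_variants(image, operations=OPERATIONS):
    """Apply every subset of `operations` (in list order) and
    collect the distinct results."""
    variants = set()
    for k in range(len(operations) + 1):
        for subset in combinations(operations, k):
            result = image
            for operation in subset:
                result = operation(result)
            # Convert to nested tuples so the result can live in a set
            variants.add(tuple(tuple(row) for row in result))
    return variants

image = [[1, 2, 3], [4, 5, 6]]
variants = all_variants(image)
print(len(variants))  # 8 distinct images from a single original
```

Three on/off operations already give 2 x 2 x 2 = 8 versions of one image; operations with continuous parameters (rotation angle, brightness shift) make the number of possible samples practically unlimited.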

Image data augmentation in Deep Learning

As mentioned above, in Deep Learning, data augmentation is a common practice. Therefore, the major DL frameworks each have their own augmentation methods or even a whole library. For example, let’s see how to apply image augmentations using built-in methods in TensorFlow (TF) and Keras, PyTorch, and MxNet.

Data augmentation in TensorFlow and Keras

To augment images when using TensorFlow or Keras as our DL framework, we can:

- Write our own augmentation pipelines or layers using tf.image.

- Use Keras preprocessing layers

- Use ImageDataGenerator

tf.image

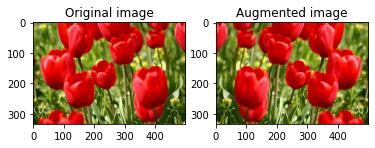

Let’s take a closer look at the first technique and define a function that will visualize an image and then apply the flip to that image using tf.image. You may see the code and the result below.

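import matplotlib.pyplot as plt
import tensorflow as tf

def visualize(original, augmented):
    # Show the original and the augmented image side by side
    fig = plt.figure()
    plt.subplot(1, 2, 1)
    plt.title('Original image')
    plt.imshow(original)
    plt.subplot(1, 2, 2)
    plt.title('Augmented image')
    plt.imshow(augmented)

# `image` is assumed to be an already loaded image tensor
# of shape (height, width, channels)
flipped = tf.image.flip_left_right(image)
visualize(image, flipped)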

For finer control, you can write your own augmentation pipeline. In most cases, it is useful to apply augmentations on a whole dataset, not a single image. You can implement it as follows.

import tensorflow as tf
import tensorflow_datasets as tfds

# Assumed values; the original snippet does not define them
IMG_SIZE = 180
batch_size = 32
AUTOTUNE = tf.data.AUTOTUNE

def augment(image, label):
    image = tf.cast(image, tf.float32)
    # Resize slightly larger than the target so the random crop has room to move
    image = tf.image.resize(image, [IMG_SIZE + 6, IMG_SIZE + 6])
    image = (image / 255.0)
    image = tf.image.random_crop(image, size=[IMG_SIZE, IMG_SIZE, 3])
    image = tf.image.random_brightness(image, max_delta=0.5)
    # Brightness shifts can push values outside [0, 1]
    image = tf.clip_by_value(image, 0.0, 1.0)
    return image, label

(train_ds, val_ds, test_ds), metadata = tfds.load(
    'tf_flowers',
    split=['train[:80%]', 'train[80%:90%]', 'train[90%:]'],
    with_info=True,
    as_supervised=True,
)

train_ds = (
    train_ds
    .shuffle(1000)
    .map(augment, num_parallel_calls=AUTOTUNE)
    .batch(batch_size)
    .prefetch(AUTOTUNE)
)

Of course, that is just the tip of the iceberg. The TensorFlow API has plenty of augmentation techniques. If you want to read more on the topic, please check the official documentation or other articles.

Keras preprocessing

As mentioned above, Keras has a variety of preprocessing layers that may be used for data augmentation. You can apply them as follows.

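import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras import layers

# In TensorFlow 2.6+ these layers live directly under tf.keras.layers;
# older releases expose them under layers.experimental.preprocessing
data_augmentation = tf.keras.Sequential([
    layers.RandomFlip("horizontal_and_vertical"),
    layers.RandomRotation(0.2),
])

# Add a batch dimension; `image` is assumed to be a float image tensor in [0, 1]
image = tf.expand_dims(image, 0)

plt.figure(figsize=(10, 10))
for i in range(9):
    augmented_image = data_augmentation(image)
    ax = plt.subplot(3, 3, i + 1)
    plt.imshow(augmented_image[0])
    plt.axis("off")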

Keras ImageDataGenerator

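Also, you may use ImageDataGenerator (tf.keras.preprocessing.image.ImageDataGenerator), which generates batches of tensor images with real-time DA. Note that recent TensorFlow releases mark this class as deprecated in favor of the Keras preprocessing layers described above.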

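from matplotlib import pyplot
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# x_train, y_train, img_rows and img_cols are assumed to come from your data-loading code
datagen = ImageDataGenerator(rotation_range=90)
datagen.fit(x_train)

for X_batch, y_batch in datagen.flow(x_train, y_train, batch_size=9):
    for i in range(0, 9):
        pyplot.subplot(330 + 1 + i)
        pyplot.imshow(X_batch[i].reshape(img_rows, img_cols, 3))
    pyplot.show()
    break  # the generator loops forever, so stop after the first batch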

Data augmentation in PyTorch and MxNet

Transforms in PyTorch

The Transforms library is the augmentation part of the torchvision package, which consists of popular datasets, model architectures, and common image transformations for Computer Vision tasks.

To install Transforms you simply need to install torchvision:

pip3 install torch torchvision

The Transforms library contains a variety of image transformations that can be chained together using Compose. Check the official documentation and you will most likely find the augmentation you need for your task.

Additionally, there is the torchvision.transforms.functional module. It has various functional transforms that give fine-grained control over the transformations. It might be really useful if you are building a more complex augmentation pipeline, for example, in the case of segmentation tasks.
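Why does fine-grained control matter for segmentation? The image and its mask must receive exactly the same geometric change, so the random parameters have to be sampled once and then applied to both. The sketch below shows this pattern with plain nested lists instead of torchvision, so it is an illustration of the idea rather than the library's API; the helpers `hflip` and `crop` are written here by hand and only mirror the names and argument order of the functional transforms.

```python
import random

def hflip(image):
    """Deterministic horizontal flip."""
    return [row[::-1] for row in image]

def crop(image, top, left, height, width):
    """Deterministic crop of a height x width window."""
    return [row[left:left + width] for row in image[top:top + height]]

def paired_augment(image, mask, crop_size, rng):
    """Sample the random parameters once, then apply them
    to the image and the mask alike."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_size)
    left = rng.randint(0, width - crop_size)
    do_flip = rng.random() < 0.5

    image = crop(image, top, left, crop_size, crop_size)
    mask = crop(mask, top, left, crop_size, crop_size)
    if do_flip:
        image, mask = hflip(image), hflip(mask)
    return image, mask

rng = random.Random(1)
image = [[10 * r + c for c in range(4)] for r in range(4)]
# Toy mask: 1 where the pixel value is even, 0 otherwise
mask = [[1 if value % 2 == 0 else 0 for value in row] for row in image]

aug_image, aug_mask = paired_augment(image, mask, crop_size=3, rng=rng)
```

If the flip and the crop were sampled independently for the image and the mask, the two would no longer line up and the labels would be wrong.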

Beyond that, Transforms has no standout feature of its own. It is used mostly with PyTorch, where it is considered the built-in augmentation library.

Sample usage of PyTorch Transforms

Let’s see how to apply augmentations using Transforms. Keep in mind that older torchvision releases worked only with PIL images, while recent ones accept both PIL images and tensors. If your image comes in another format (for example, a NumPy array), either read it as a PIL image or add the necessary conversion to your augmentation pipeline.

from torchvision import transforms as tr
from torchvision.transforms import Compose

pipeline = Compose([
    tr.RandomRotation(degrees=90),
    tr.RandomRotation(degrees=270),
])

# `img` is assumed to be an already loaded PIL image
augmented_image = pipeline(img)

Sometimes you might want to write a custom dataset class for training (named Dataloader in the snippet that follows). Let’s see how to apply augmentations via Transforms if you are doing so.

from torch.utils.data import Dataset
from torchvision import transforms
from torchvision.transforms import Compose as C

def aug(p=0.5):
    # The probability belongs to the individual transform; Compose takes no `p` argument
    return C([transforms.RandomHorizontalFlip(p=p)])

class Dataloader(Dataset):
    def __init__(self, train, csv, transform=None):
        ...
        self.transform = transform

    def __getitem__(self, index):
        ...
        # Apply the augmentation pipeline, if one was provided
        if self.transform is not None:
            img = self.transform(img)
        return img, target

    def __len__(self):
        return len(self.image_list)

trainset = Dataloader(train=True, csv='/path/to/file/', transform=aug())

Transforms in MxNet

MxNet also has a built-in augmentation library called Transforms (mxnet.gluon.data.vision.transforms). It is very similar to the PyTorch Transforms library, so there is little to add; check the Transforms section above if you want to find out more. General usage is as follows.

Sample usage of MxNet Transforms

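from mxnet.gluon.data.vision import transforms

color_aug = transforms.RandomColorJitter(
    brightness=0.5,
    contrast=0.5,
    saturation=0.5,
    hue=0.5,
)

# `example_image` is assumed to be an image already loaded as an MXNet NDArray
# of shape (height, width, channels); Gluon transforms can be called directly
augmented_image = color_aug(example_image)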

Those are nice examples, but from my experience, the real power of data augmentation comes out when you are using custom libraries:

- They have a wider set of transformation methods

- They allow you to create custom augmentation

- You can stack one transformation with another.

That is why using custom DA libraries might be more effective than using built-in ones.

Image data augmentation libraries

In this section, we will talk about the following libraries:

- Augmentor

- Albumentations

- Imgaug

- AutoAugment (DeepAugment)

We will look at the installation, augmentation functions, augmenting process parallelization, custom augmentations, and provide a simple example. Remember that we will focus on image augmentation as it is most commonly used.

Before we start, I have a few general notes about using custom augmentation libraries with different DL frameworks.

In general, all libraries can be used with all frameworks if you perform augmentation before training the model.

The point is that some libraries have pre-existing synergy with a specific framework, for example, Albumentations and PyTorch. It’s more convenient to use such pairs. Still, if you need specific functionality or you like one library more than another, you should either perform DA before starting to train a model or write a custom Dataloader and training process instead.

The second major topic is using custom augmentations with different augmentation libraries. For example, you might want to use your own OpenCV (cv2) image transformation together with a specific augmentation from the Albumentations library.

To be clear, you can do that with any library, but it might be more complicated than you think. Some libraries have a guide in their official documentation on how to do it, but others do not.

If there is no guide, you basically have two ways:

- Apply augmentations separately, for example, use your transformation operation and then the pipeline.

- Check GitHub repositories in case someone has already figured out how to integrate a custom augmentation into the pipeline correctly.
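The first option is the simpler one, and it can be sketched in a few lines. Everything in the snippet is a stand-in: `invert` plays the role of your own transformation, and `library_pipeline` is a placeholder for a pipeline object from whichever augmentation library you use.

```python
def invert(image):
    """Hypothetical custom transformation: invert 8-bit pixel intensities."""
    return [[255 - value for value in row] for row in image]

def library_pipeline(image):
    """Placeholder for a pipeline from an augmentation library.
    Here it only flips the image horizontally."""
    return [row[::-1] for row in image]

def augment(image):
    # Step 1: run the custom operation on its own
    image = invert(image)
    # Step 2: hand the result to the library pipeline
    return library_pipeline(image)

image = [[0, 128, 255], [10, 20, 30]]
augmented = augment(image)
print(augmented)  # [[0, 127, 255], [225, 235, 245]]
```

The downside of this approach is that the custom step sits outside the pipeline, so it does not benefit from pipeline features such as per-operation probabilities.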

Ok, with that out of the way, let’s dive in.

Augmentor

Moving on to the libraries, Augmentor is a Python package that aims to be both a data augmentation tool and a library of basic image pre-processing functions.

It is pretty easy to install Augmentor via pip:

pip install Augmentor

If you want to build the package from source, please check the official documentation.

In general, Augmentor consists of a number of classes for standard image transformation functions, such as Crop, Rotate, Flip, and many more.

Augmentor allows the user to pick a probability parameter for every transformation operation. This parameter controls how often the operation is applied. Thus, Augmentor allows forming an augmenting pipeline that chains together a number of operations that are applied stochastically.

This means that each time an image is passed through the pipeline, a completely different image is returned. Depending on the number of operations in the pipeline and the probability parameter, a very large amount of new image data can be created. Basically, that is data augmentation at its best.
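The mechanism is easy to sketch in plain Python. The class below is not Augmentor's API; `StochasticPipeline` and its methods are names invented for this illustration. Each operation is paired with a probability and is applied independently every time an image passes through.

```python
import random

class StochasticPipeline:
    """Chain of (operation, probability) pairs applied independently per image."""

    def __init__(self, seed=None):
        self.steps = []
        self.rng = random.Random(seed)

    def add(self, operation, probability):
        self.steps.append((operation, probability))
        return self  # allow chained calls

    def __call__(self, image):
        for operation, probability in self.steps:
            if self.rng.random() < probability:
                image = operation(image)
        return image

def flip_horizontal(image):
    return [row[::-1] for row in image]

def flip_vertical(image):
    return image[::-1]

pipeline = StochasticPipeline(seed=0)
pipeline.add(flip_horizontal, probability=0.7).add(flip_vertical, probability=0.3)

image = [[1, 2], [3, 4]]
samples = [pipeline(image) for _ in range(1000)]
unchanged = sum(sample == image for sample in samples) / len(samples)
# In expectation, (1 - 0.7) * (1 - 0.3) = 0.21 of the outputs equal the input
print(f"Share of unchanged images: {unchanged:.2f}")
```

The same arithmetic is useful when designing a real pipeline: multiplying the "skip" probabilities of all operations tells you what share of images will come out untouched.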

What can we do with images using Augmentor? Augmentor is more focused on geometric transformation though it has other augmentations too. The main features of Augmentor package are:

- Perspective skewing – look at an image from a different angle

- Elastic distortions – add distortions to an image

- Rotating – simply, rotate an image

- Shearing – tilt an image along one of its sides

- Cropping – crop an image

- Mirroring – apply different types of flips

Augmentor is a well-knit library. You can use it with various DL frameworks (TF, Keras, PyTorch, MxNet) because augmentations may be applied even before you set up a model.

Moreover, Augmentor allows you to add custom augmentations. It might be a little tricky as it requires writing a new operation class, but you can do that.

Unfortunately, Augmentor is neither especially fast nor especially flexible in terms of functionality. There are libraries that offer more transformation functions and perform DA faster and more efficiently. That is why Augmentor is probably the least popular DA library of the ones covered here.

Sample usage of Augmentor

Let’s check the simple usage of Augmentor:

- We import it.

- We create an augmenting pipeline that points to a directory with images.

- We add some operations to it.

- We use the sample method to get the augmented images.

Note that when using sample, you need to specify the number of augmented images you want to get.

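import Augmentor

# Create a pipeline that reads images from the given directory
p = Augmentor.Pipeline("/path/to/images")

# Each operation is applied with its own probability
p.rotate(probability=0.7, max_left_rotation=10, max_right_rotation=10)
p.zoom(probability=0.3, min_factor=1.1, max_factor=1.6)

# Generate 10,000 augmented images
p.sample(10000)

Albumentations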

Albumentations is a computer vision tool designed to perform fast and flexible image augmentations. It appears to have the largest set of transformation functions of all image augmentation libraries.

Let’s install Albumentations via pip. If you want to do it somehow else, check the official documentation.

pip install albumentations

Albumentations provides a single and simple interface to work with different computer vision tasks such as classification, segmentation, object detection, pose estimation, and many more. The library is optimized for maximum speed and performance and has plenty of different image transformation operations.

When it comes to image augmentation, there is very little Albumentations cannot do. It is arguably the most feature-rich of these libraries, as it does not focus on one specific area of image transformations. You can simply check the official documentation and you will most likely find the operation you need.

Moreover, Albumentations has seamless integration with deep learning frameworks such as PyTorch and Keras. The library is a part of the PyTorch ecosystem, but you can use it with TensorFlow as well. This makes Albumentations one of the most commonly used image augmentation libraries.

On the other hand, Albumentations is not integrated with MxNet, which means if you are using MxNet as a DL framework you should write a custom Dataloader or use another augmentation library.

It’s worth mentioning that Albumentations is an open-source library. You can easily check the original code if you want to.

Sample usage of Albumentations

Let’s see how to augment an image using Albumentations. You need to define the pipeline using the Compose method (or you can use a single augmentation), pass an image to it, and get the augmented one.

import albumentations as A
import cv2
import matplotlib.pyplot as plt

def visualize(image):
    plt.figure(figsize=(10, 10))
    plt.axis('off')
    plt.imshow(image)

# OpenCV loads images as BGR, so convert to RGB before augmenting and plotting
image = cv2.imread('/path/to/image')
image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

transform = A.Compose([
    A.CLAHE(),
    A.RandomRotate90(),
    A.Transpose(),
    A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.50,
                       rotate_limit=45, p=.75),
    A.Blur(blur_limit=3),
    A.OpticalDistortion(),
    A.GridDistortion(),
    A.HueSaturationValue(),
])

# The pipeline takes named arguments and returns a dictionary
augmented_image = transform(image=image)['image']
visualize(augmented_image)

ImgAug

Now, after reading about Augmentor and Albumentations you might think all image augmentation libraries are pretty similar to one another.

That is right. In many cases, the functionality of each library is interchangeable. Nevertheless, each one has its own key features.

ImgAug is also a library for image augmentations. Functionality-wise, it is pretty similar to Augmentor and Albumentations, but the main feature stated in the official ImgAug documentation is the ability to execute augmentations on multiple CPU cores. If you want to do that, check the corresponding guide in the official documentation.

As you can see, this is pretty different from Augmentor’s focus on geometric transformations or Albumentations’ attempt to cover all possible augmentations.

Nevertheless, ImgAug’s key feature seems a bit odd, as both Augmentor and Albumentations can be executed on multiple CPU cores as well. In any case, ImgAug supports a wide range of augmentation techniques, just like Albumentations, and implements sophisticated augmentations with fine-grained control.

ImgAug can be easily installed via pip or conda.

pip install imgaug

Sample usage of ImgAug

Like other image augmentation libraries, ImgAug is easy to use. To define an augmenting pipeline, use Sequential and then simply stack different transformation operations, as in the other libraries.

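from imgaug import augmenters as iaa

seq = iaa.Sequential([
    iaa.Crop(px=(0, 16)),              # crop each side by 0 to 16 pixels
    iaa.Fliplr(0.5),                   # flip horizontally with probability 0.5
    iaa.GaussianBlur(sigma=(0, 3.0)),  # blur with sigma sampled from [0, 3.0]
])

for batch_idx in range(1000):
    # load_batch is a placeholder for your own data-loading function
    images = load_batch(batch_idx)
    images_aug = seq(images=images)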

AutoAugment

AutoAugment, on the other hand, is something more interesting. As you might know, using Machine Learning (ML) to improve ML design choices has already reached the space of DA.

In 2018, Google presented the AutoAugment algorithm, which is designed to search for the best augmentation policies. AutoAugment helped to improve state-of-the-art model performance on such datasets as CIFAR-10, CIFAR-100, ImageNet, and others.

Still, AutoAugment is tricky to use, as it does not provide the controller module, which prevents users from running it for their own datasets. That is why using AutoAugment might be relevant only if it already has the augmentation strategies for the dataset we plan to train on and the task we are up to.

Therefore, let us take a closer look at DeepAugment, which is a somewhat faster and more flexible alternative to AutoAugment. DeepAugment has no strong connection to AutoAugment besides the general idea and was developed by a group of enthusiasts. You can install it via pip:

pip install deepaugment

It’s important for us to know how to use DeepAugment to get the best augmentation strategies for our images. You may do it as follows or check out the official GitHub repository.

Please keep in mind that when you use the optimize method, you should specify the number of samples that will be used to find the best augmentation strategies.

from deepaugment.deepaugment import DeepAugment

deepaug = DeepAugment(my_images, my_labels)

best_policies = deepaug.optimize(300)

Overall, neither AutoAugment nor DeepAugment is commonly used. Still, it might be quite useful to run them if you have no idea which augmentation techniques will be the best for your data. You should only keep in mind that it will take plenty of time because multiple models will be trained.

It’s worth mentioning that we have not covered all custom image augmentation libraries, but we have covered the major ones. Now you know which libraries are the most popular, what advantages and disadvantages they have, and how to use them. This knowledge will help you find any additional information if you need it.
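To see why such a search is expensive, it helps to look at its general shape: propose a policy, train a model with it, score the model, repeat. The sketch below is a deliberately simplified stand-in. It uses plain random search instead of the reinforcement learning controller of AutoAugment or the Bayesian optimization described in the DeepAugment repository, and `evaluate_policy` is a made-up formula that replaces the actual training run. All names in it are assumptions for illustration.

```python
import random

OPERATIONS = ["rotate", "flip", "shear", "blur"]

def sample_policy(rng):
    """A policy here is a list of (operation, magnitude) pairs."""
    chosen = rng.sample(OPERATIONS, k=2)
    return [(name, round(rng.uniform(0.0, 1.0), 2)) for name in chosen]

def evaluate_policy(policy):
    """Stand-in for 'train a child model with this policy and return
    its validation accuracy'. The formula is made up for illustration."""
    penalty = sum(abs(magnitude - 0.5) for _, magnitude in policy)
    return 1.0 - penalty / len(policy)

def random_search(n_trials, seed=0):
    rng = random.Random(seed)
    best_policy, best_score = None, float("-inf")
    for _ in range(n_trials):
        policy = sample_policy(rng)
        score = evaluate_policy(policy)  # in reality: one full training run
        if score > best_score:
            best_policy, best_score = policy, score
    return best_policy, best_score

best_policy, best_score = random_search(n_trials=50)
print(best_policy, round(best_score, 3))
```

In a real search, every call to the scoring function means training a model, which is exactly where the time goes.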

Speed comparison of image data augmentation libraries

As you may have already figured out, the augmentation process is quite expensive time- and computation-wise.

The time needed to perform DA depends on the number of data points we need to transform, on the overall augmenting pipeline difficulty, and even on the hardware that you use to augment your data.

Let’s run some experiments to find out the fastest augmentation library. We will perform these experiments for Augmentor, Albumentations, ImgAug, and Transforms. We will use an image dataset from Kaggle that is made for flower recognition and contains over four thousand images.

For our first experiment, we will create an augmenting pipeline that consists only of two operations. These will be Horizontal Flip with 0.4 probability and Vertical Flip with 0.8 probability. Let’s apply the pipeline to every image in the dataset and measure the time.

| Library | Time (seconds) |

|---|---|

| Augmentor | 31.9 |

| Albumentations | 10.9 |

| ImgAug | 12.04 |

| Transforms | 9.8 |

As anticipated, Augmentor performs much slower than the other libraries, while both Albumentations and Transforms show good results, as they are optimized to perform fast augmentations.

For our second experiment, we will create a more complex pipeline with various transformations to see if Transforms and Albumentations stay at the top. We will stack more geometric transformations in the pipeline. This way, we can still use all four libraries, since Augmentor, for example, does not have many kernel filter operations.

You may find the full pipeline in the notebook that I’ve prepared for you. Please, feel free to experiment and play with it.

| Library | Time (seconds) |

|---|---|

| Augmentor | 28.2 |

| Albumentations | 17.7 |

| ImgAug | 30.9 |

| Transforms | 15.2 |

Once more Transforms and Albumentations are at the top.

Moreover, I used neptune.ai to compare the CPU usage. If we check the CPU-usage graphs in the Neptune app, we find that both Albumentations and Transforms use less than 60% of CPU resources.

On the other hand, Augmentor and ImgAug use more than 80%.

As you may have noticed, both Albumentations and Transforms are really fast. That is why they are commonly used in real life.

May be useful

When you track your ML experiments with neptune.ai, the system metrics are logged automatically by default. This includes hardware consumption (CPU, GPU, and memory) as well as console logs (stdout, stderr). See what else you can track and display in the Neptune app.

Best practices, tips, and tricks

It’s worth mentioning that despite DA being a powerful tool you should use it carefully. There are some general rules that you might want to follow when applying augmentations:

- Choose proper augmentations for your task. Let’s imagine that you are trying to detect a face in an image. You choose Random Erasing as an augmentation technique and suddenly your model does not perform well even on the training data. That is because there is no face left in the image: it was erased by the augmentation. The same goes for voice detection when noise injection is applied to the recording as an augmentation. Keep these cases in mind and be logical when choosing DA techniques.

- Do not use too many augmentations in one sequence. You may simply create a totally new observation that has nothing in common with your original training (or testing) data.

- Display augmented data (images and text) in the notebook and listen to the converted audio sample before starting training on them. It’s quite easy to make a mistake when forming an augmenting pipeline. That is why it’s always better to double-check the result.

- Time the augmenting process and check the amount of computational resources involved. As you may have seen above, neptune.ai can help you do that. Do not forget about Python’s time module either.
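For the last tip, a timing check does not need anything beyond the standard library. The sketch below uses `time.perf_counter` and reuses the flip probabilities from the first speed experiment above; the dataset is synthetic and the helper names are assumptions for illustration, so swap in your own pipeline and data.

```python
import random
import time

def flip_horizontal(image):
    return [row[::-1] for row in image]

def flip_vertical(image):
    return image[::-1]

def augment(image, rng):
    # Horizontal flip with probability 0.4, vertical flip with probability 0.8
    if rng.random() < 0.4:
        image = flip_horizontal(image)
    if rng.random() < 0.8:
        image = flip_vertical(image)
    return image

def time_augmentation(images, rng):
    """Augment every image and return the results with the elapsed seconds."""
    start = time.perf_counter()
    augmented = [augment(image, rng) for image in images]
    elapsed = time.perf_counter() - start
    return augmented, elapsed

rng = random.Random(0)
# Synthetic stand-in for a dataset: 200 random 16x16 grayscale images
images = [
    [[rng.randint(0, 255) for _ in range(16)] for _ in range(16)]
    for _ in range(200)
]

augmented, elapsed = time_augmentation(images, rng)
print(f"Augmented {len(augmented)} images in {elapsed:.4f} s")
```

Running the same harness with each candidate pipeline on the same data gives a quick, like-for-like comparison before you commit to one library.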

Also, it’s a great practice to check Kaggle notebooks before creating your own augmenting pipeline. There are plenty of ideas you may find there. Try to find a notebook for a similar task and check if the author applied the same augmentations as you’ve planned.

Final thoughts

In this article, we have figured out what data augmentation is, what DA techniques are there, and what libraries you can use to apply them.

To my knowledge, the best publicly available library is Albumentations. That is why if you are working with images and do not use MxNet or TensorFlow as your DL framework, you should probably use Albumentations for DA.

Hopefully, with this information, you will have no problems setting up the DA for your next machine learning project.

Resources

- https://www.techopedia.com/definition/28033/data-augmentation

- https://towardsdatascience.com/data-augmentation-for-deep-learning-4fe21d1a4eb9

- https://machinelearningmastery.com/how-to-configure-image-data-augmentation-when-training-deep-learning-neural-networks/

- https://augmentor.readthedocs.io/en/master/userguide/install.html

- https://albumentations.ai/docs/getting_started/installation/

- https://imgaug.readthedocs.io/en/latest/source/installation.html

- https://github.com/barisozmen/deepaugment

- http://ai.stanford.edu/blog/data-augmentation/
